I had a chance to give an overview of machine learning to a “leader” – think executive at a big company. This person is world class at what they do, but has no understanding of machine learning, and fears they are getting left behind.

And it was an eye-opening experience for me. Think about it. Machine learning is hot. The news is full of amazing stories of beyond-human-level successes with ML. What if a competitor gets there first? There’s a lot of pressure on a leader to make good decisions.

And it’s not easy to know what to do. People throw around buzzwords, deep-boosted this, reinforcement BERT-ing of that, Bayesianized sigmoidization of neural activizationing, and blah-bla-die blah-da day. Ask a researcher, they’ll tell you how their latest technique is the linchpin to success and everyone else has been getting it wrong all along; ask someone just out of school and they’ll ask you where the training data is at; ask someone with a lot of experience, and, well, you can’t, because one of the big tech companies already hired them.

Where is the bridge between this potentially amazing tool, and a good decision about if and where to invest in it?

What’s needed is the ability to move beyond asking ‘can I model that?’ and start asking ‘should my organization model that?’ I call this type of thought: machine learning architecture.

And you don’t have to be super technical to be a great machine learning architect. Just think of it like any other investment a business could make. Should we buy a second delivery van? Well, is a van the right tool to solve the problems we’re having? Can we adapt our business to properly leverage it? What will it cost to run it month over month?

Basic questions, but they require a deep understanding of the strengths and weaknesses of machine learning, and they require stepping back to understand the context. I’m going to go through three questions you can ask to start thinking like a machine learning architect:

- Is machine learning the right way to solve the problem?

- How do machine learning systems integrate with existing systems?

- What is the cost to build and run the ML system over time?

Is machine learning the right way to solve the problem?

If you’re writing software for a bank to deal with withdrawals, you could use machine learning. You’ll have tons of training data, endless logs of transactions with info on: balance before, withdrawal amount, new balance. A simple regression problem…You could probably even get to like 99.5% accuracy if you worked at it hard enough…

Or you could write one line of code: newBalance = oldBalance - withdrawalAmount;

A bit of a silly example. But the point is that machine learning isn’t right for every problem.
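To make the contrast concrete, here is a minimal Python sketch of the one-line rule. The function name `new_balance` and the use of `Decimal` for money are assumptions made for illustration, not anything a real banking system is claimed to use.

```python
from decimal import Decimal


def new_balance(old_balance: Decimal, withdrawal_amount: Decimal) -> Decimal:
    """Exact rule for a withdrawal: no training data, no model, no error rate."""
    if withdrawal_amount < 0:
        # Illustrative guard (an assumption): a withdrawal cannot be negative.
        raise ValueError("withdrawal amount must be non-negative")
    return old_balance - withdrawal_amount


print(new_balance(Decimal("100.00"), Decimal("30.25")))  # 69.75
```

The rule is right every time, costs nothing to retrain, and can be read in one glance. A regression model that reaches 99.5% accuracy on the same task would still be worse on all three counts.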

A machine learning architect will have a good understanding of the properties that make machine learning an efficient approach to solving a problem. Here are a few to get you started:

- The problem is very large. Like if you have to organize tens of millions of web pages or pictures or social network posts and it’s just too much to do manually. Think about it, there are more web pages than 100 people could examine in their lifetime, more than 1000 people could. When a problem is huge, machine learning might be the right answer.

- The problem is open ended. There are more books, buildings, products, people, and, well – stuff – every day. If you need to constantly make decisions about new things and it’s just not practical to keep up, machine learning might be the right answer.

- The problem changes. What’s worse than building an expensive system once? Building it every week, over and over, forever. We’re living through a huge change right now. Every business projection and decision process designed in 2019 is out the window for 2020. Machine learning isn’t a magic bullet for dealing with change, but it can make it faster and cheaper to adapt.

- The problem is hard. Things like human level perception, or where humans need some serious expertise to succeed. Think about a game like tic-tac-toe. Anyone can become ‘world class’ at that game by learning a few simple rules. Tic-tac-toe is not hard enough to need ML. Contrast this to chess, where experts are much, much better than beginners – that’s a hard problem and ML might help.

A machine learning architect will identify at least one of these four properties in a problem before suggesting machine learning. In fact, they’ll probably see several of them. If not, they’ll probably find a cheaper and more reliable solution without machine learning.
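The four properties can be written down as a simple checklist. The sketch below is only an illustration of that screening step: the names `ProblemProfile`, `properties_present`, and `worth_considering_ml` are hypothetical, and the yes/no answers are judgment calls a person supplies, not something the code can work out.

```python
from dataclasses import dataclass


@dataclass
class ProblemProfile:
    """Yes/no answers to the four properties, filled in by a person."""
    very_large: bool
    open_ended: bool
    changes_over_time: bool
    hard: bool


def properties_present(profile: ProblemProfile) -> list:
    """Return the names of the properties that apply to this problem."""
    return [name for name, applies in vars(profile).items() if applies]


def worth_considering_ml(profile: ProblemProfile) -> bool:
    """ML is only worth discussing if at least one property applies."""
    return len(properties_present(profile)) >= 1


# Illustrative judgments for the two examples used in the text.
bank_withdrawal = ProblemProfile(False, False, False, False)
web_page_organizing = ProblemProfile(True, True, True, False)

print(worth_considering_ml(bank_withdrawal))    # False
print(properties_present(web_page_organizing))  # ['very_large', 'open_ended', 'changes_over_time']
```

Passing the check does not mean machine learning is the answer. It only means the conversation is worth having; the integration and cost questions still apply.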

How do machine learning systems integrate with your existing systems?

One of the great Program Managers I had the pleasure to work with used to say: Machine learning is an approach, it isn’t a solution. A machine learning architect will understand how the machine learning approach they select complements and is supported by the approach taken by their existing systems.

And there are several important approaches to machine learning. I call them machine learning design patterns.

One important ML design pattern is called corpus based, where you invest in creating a data asset and leveraging it across a series of hard (but not-time-changing) problems. When I worked on the Kinect, we took a corpus based approach, collecting tons of data and carefully annotating it for many different uses.

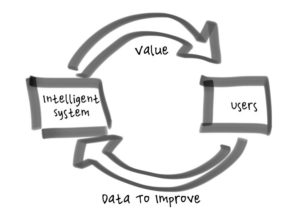

Another important ML design pattern is called closed loop, where you carefully shape the interactions your users will have with your system so that they automatically create training data as they go. I used a closed loop approach when working on anti-abuse systems, where an adversary changed the problem every day, so there was much less value in building up a long-lived corpus.
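As a rough sketch of what "users create training data as they go" could look like in code, the example below logs each interaction together with an implicit label taken from what the user did. Everything here is hypothetical: the `Interaction` schema, the idea that a "report" click is the label, and the `ClosedLoopLog` class are assumptions for illustration, not a description of any real anti-abuse system.

```python
from dataclasses import dataclass, field


@dataclass
class Interaction:
    """One decision shown to a user, plus what the user did about it."""
    features: dict           # whatever the model saw, e.g. {"links": 3}
    predicted_abusive: bool  # what the current model decided
    user_reported: bool      # implicit label: did the user report the content?


@dataclass
class ClosedLoopLog:
    """Collects labeled examples as a side effect of normal usage."""
    examples: list = field(default_factory=list)
    disagreements: int = 0

    def record(self, interaction: Interaction) -> None:
        label = interaction.user_reported
        # Every interaction becomes a (features, label) training example.
        self.examples.append((interaction.features, label))
        # Track how often users contradict the model.
        if label != interaction.predicted_abusive:
            self.disagreements += 1


log = ClosedLoopLog()
log.record(Interaction({"links": 3}, predicted_abusive=False, user_reported=True))
log.record(Interaction({"links": 0}, predicted_abusive=False, user_reported=False))
print(len(log.examples), log.disagreements)  # 2 1
```

The design point is that nobody sits down to annotate anything. The product experience itself is shaped so that ordinary use leaves behind fresh labels, which matters most when the problem shifts faster than a hand-built corpus could keep up.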

I’ll provide links to videos about these two important ML design patterns (corpus based, closed loop), including a breakdown of their properties, and a walk-through of case studies.

A machine learning architect will be familiar with the pros and cons of the common machine learning design patterns. They’ll know how each design pattern could interact with their current systems & processes, which match well, and which would require major rework.

What is the cost to build and run the ML system over time?

Building an ML system is easy! Just install Python, maybe PyTorch, a bit of feature engineering, a few days of tuning, then compile the model into your current app and add ‘proven machine learning expert’ to your resume…right?

Well, that’s one way to do it, but if you’re doing it that way, you’re not thinking like a machine learning architect.

To be most valuable, machine learning needs a lot of support, and if you’re not building that support, you probably didn’t need machine learning to begin with.

This includes things like telemetry systems, automated retraining, model deployment and management systems, orchestration systems, and client integrations.

You probably won’t have to invest in all of these to make efficient use of machine learning, but a machine learning architect would understand how important they are (given the ML design pattern they’d selected) and how much work it would be to add them to their existing systems.

For example, you’ve got to be realistic about mistakes, because any machine learning based system is going to make mistakes. Wild, and crazy mistakes. Like you know how when a human expert makes a mistake, they are usually at least kind of right? In the ballpark? Because they pretty much know what’s going on? Well, machine learning isn’t like that. Machine learning makes bat-zo-bizarro mistakes.

So ask yourself, how does your existing system interact with the mistakes you expect? Are the mistakes easy to detect and mitigate? Or will you have to change your existing workflows to identify and mitigate the problems that ML will create?
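One common way to make mistakes easier to detect and mitigate is to wrap the model in a plausibility check with a fallback. The sketch below assumes a hypothetical delivery-time estimator; the function names, the 24-hour bound, and the 45-minute default are all invented for illustration.

```python
def guarded_predict(model, fallback, is_plausible, x):
    """Use the model's answer only when it passes a sanity check."""
    prediction = model(x)
    if is_plausible(x, prediction):
        return prediction, "model"
    # The model produced something bizarre: fall back and say so.
    return fallback(x), "fallback"


def toy_model(distance_km):
    """Stand-in for a learned estimator of delivery minutes (hypothetical)."""
    # Simulates a wild mistake on unusual inputs.
    return -9000.0 if distance_km > 100 else distance_km * 5.0


def default_estimate(distance_km):
    """Conservative fallback, e.g. a historical average (assumed value)."""
    return 45.0


def plausible_minutes(distance_km, minutes):
    """A delivery estimate must be positive and under 24 hours (assumed bound)."""
    return 0 < minutes <= 24 * 60


print(guarded_predict(toy_model, default_estimate, plausible_minutes, 4.0))    # (20.0, 'model')
print(guarded_predict(toy_model, default_estimate, plausible_minutes, 250.0))  # (45.0, 'fallback')
```

Returning the source alongside the value lets telemetry count how often the fallback fires, which is one cheap signal that the model needs attention. A check like this only catches mistakes that look implausible; wrong-but-believable answers still need a workflow of their own.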

So there are three steps to thinking like a machine learning architect: Do you have the right problem; how does machine learning integrate with existing systems; and what does it cost to build and run over time.

And developing the base skills to think this way can be valuable to anyone involved in machine learning systems, not just the machine learning scientist. So if you’re a machine learning professional, an engineering manager, a technical program manager, or even that leader I got a chance to talk to, who is trying to figure out if and how to invest in machine learning…

You can learn a lot more by reading this book, Building Intelligent Systems. Or by subscribing to my YouTube channel.

Good luck, and stay safe!


